
Building a web scraper with Python and Selenium

A web scraper built with Selenium that uses the Pandas library to store the data in a CSV file.

I recently became curious about web scrapers and started building one.

In this tutorial, we will use Selenium to build a web scraper that extracts historical wheat prices and stores them in a CSV file using the Pandas library.

First, let's install our dependencies:

pip install selenium pandas

This is the website where we will use our scraper:

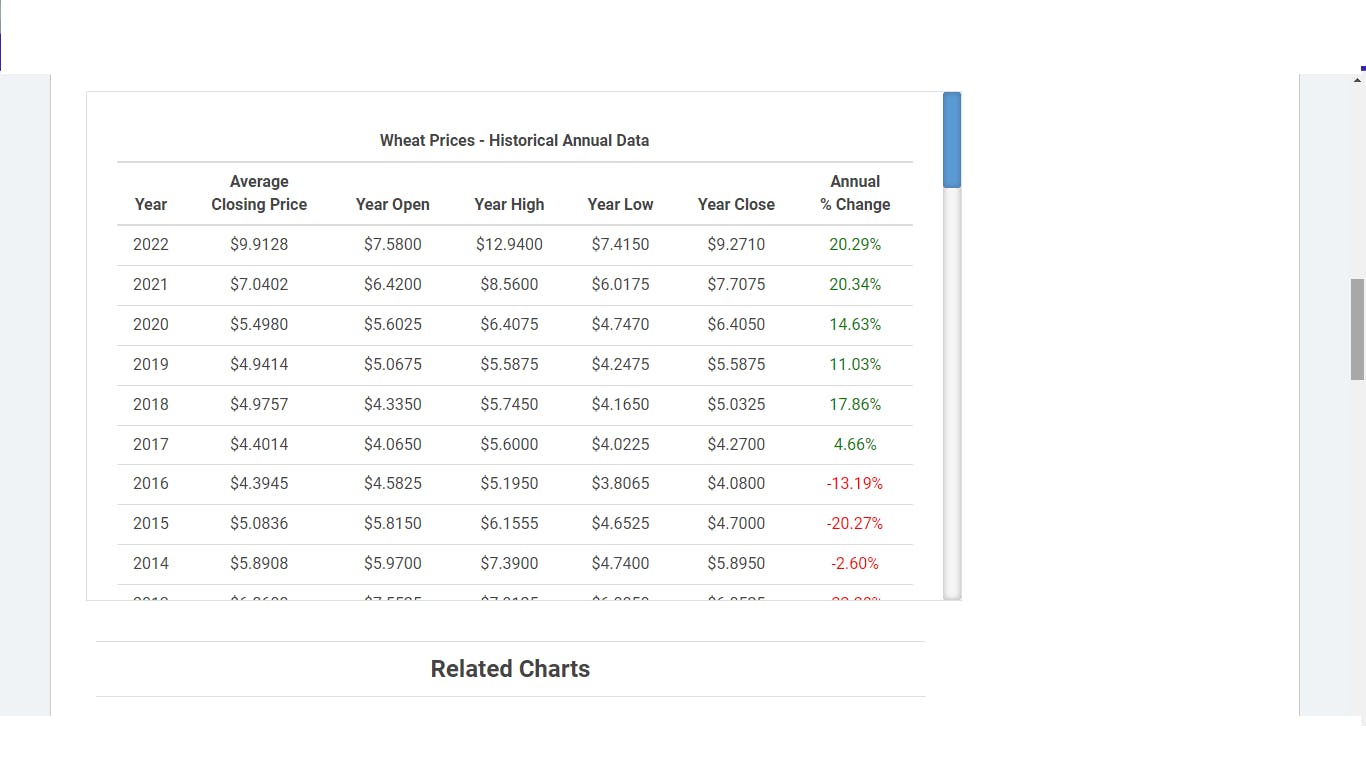

We will use our scraper to extract the historical data from this table:

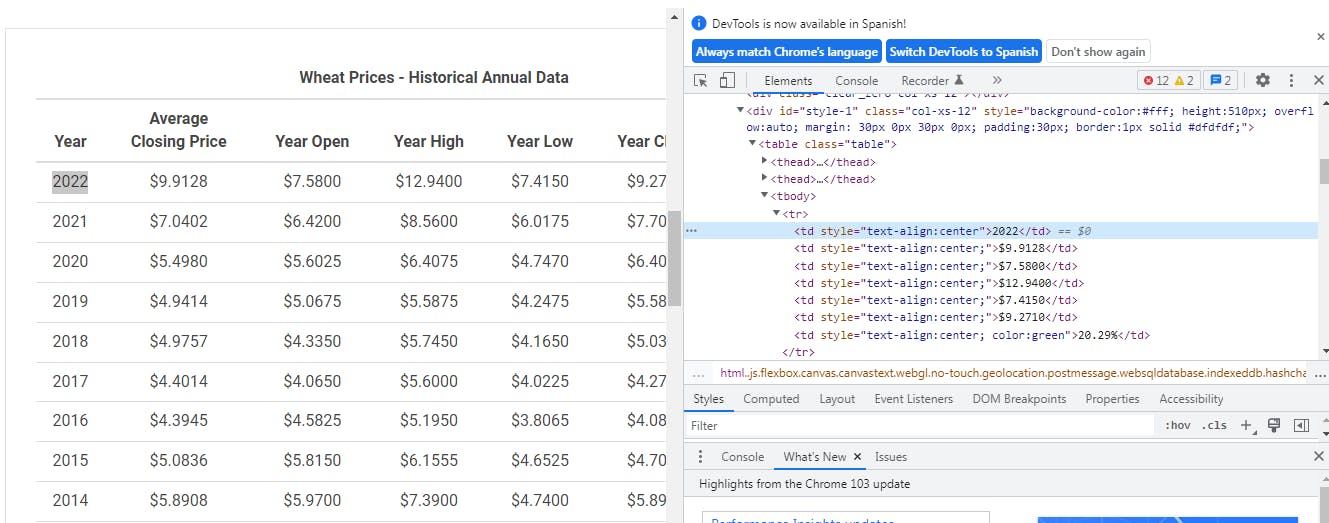

Now we have to identify the HTML tags that contain the data we want to extract.

In the image above, we right-click the page element we want to extract and select "Inspect". The developer tools show that each <td> tag holds a single value, while each <tr> tag holds a whole row. For learning purposes, I think it is easier in this case to extract the whole row.
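To make the row-versus-cell distinction concrete, here is a small sketch that parses a hypothetical HTML fragment shaped like the price table. The years and prices are made-up placeholders, not data from the site, and the sketch uses Python's standard html.parser module instead of Selenium so it runs without a browser. Each <tr> becomes one list, and each <td> (or <th>) becomes one string inside it.

```python
from html.parser import HTMLParser

# Hypothetical table fragment; the values are placeholders, not real wheat prices.
SAMPLE_HTML = """
<table>
  <tr><th>Year</th><th>Average Closing Price</th></tr>
  <tr><td>2021</td><td>7.00</td></tr>
  <tr><td>2020</td><td>5.50</td></tr>
</table>
"""


class RowCollector(HTMLParser):
    """Collects the text of each <td>/<th> cell, grouped by its <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []          # one list of cell strings per <tr>
        self._in_cell = False   # True while inside a <td> or <th>

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])        # a new row starts
        elif tag in ("td", "th"):
            self._in_cell = True
            self.rows[-1].append("")    # a new, empty cell in the current row

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False

    def handle_data(self, data):
        # Only keep text that sits inside a cell; whitespace between tags is ignored.
        if self._in_cell:
            self.rows[-1][-1] += data.strip()


parser = RowCollector()
parser.feed(SAMPLE_HTML)

for cells in parser.rows:
    print(" | ".join(cells))
```

Keeping the cells separate preserves multi-word headers such as "Average Closing Price", which can no longer be told apart from separate values once a row is flattened into space-separated text.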

main.py

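from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
import pandas as pd

options = Options()
# Run Chrome without opening a browser window
options.add_argument("--headless=new")

# Tell Selenium where the downloaded ChromeDriver executable lives
service = Service(executable_path="C:/path/to/chromedriver.exe")
driver = webdriver.Chrome(service=service, options=options)

driver.get("https://www.macrotrends.net/2534/wheat-prices-historical-chart-data")

# Every <tr> element is one row of the table
rows = driver.find_elements(By.TAG_NAME, "tr")

list_rows = []
for row in rows:
    list_rows.append(row.text)

df = pd.DataFrame(list_rows)
df.to_csv('prices_wheat.csv')

# Close the browser and end the WebDriver session
driver.quit()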

We import the packages we are going to use, create an instance of the Options class, and enable headless mode so the scraper does its job without opening the Chrome GUI.

Then we create the driver with webdriver.Chrome(), passing it the options and the path where ChromeDriver is located. If you don't have ChromeDriver yet, you can download it here.

We call the get() method with the URL of the site we want to scrape. Then we call the find_elements() method with the tag name we are looking for, in this case "tr", and store the matching elements in the rows variable.

Next, we define an empty list, iterate through rows, and append the text of every row to the list. We convert the list into a Pandas DataFrame with the DataFrame constructor and save it as a CSV file with to_csv(). Finally, we call the quit() method to close the browser.
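Because each row is stored as a single string, the DataFrame ends up with just one column, and to_csv() also writes the DataFrame index as an extra first column by default. A minimal sketch with two made-up row strings (placeholders standing in for row.text, not scraped values) shows both effects:

```python
import pandas as pd

# Hypothetical row texts, standing in for what row.text returns.
list_rows = ["2021 7.00", "2020 5.50"]

df = pd.DataFrame(list_rows)

# With no path argument, to_csv() returns the CSV content as a string.
with_index = df.to_csv()
without_index = df.to_csv(index=False)

print(with_index)
print(without_index)
```

The first string starts with an empty index header and the row numbers 0 and 1; if you don't need those row numbers in the file, passing index=False to to_csv() leaves them out.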

To run this program, run this command in your terminal:

py main.py

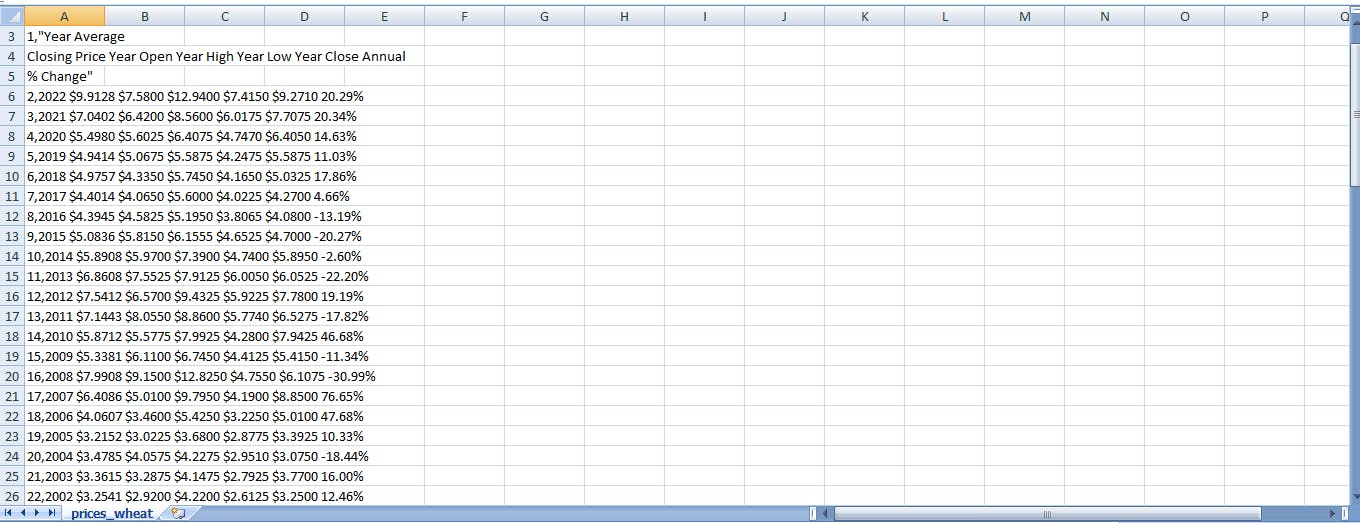

This is the CSV file it will generate.

I will change the code a little bit so every value is separated by a comma.

for row in rows:
    # Split the row text on whitespace so each value becomes its own column
    list_rows.append(row.text.split())

df = pd.DataFrame(list_rows)
df.to_csv('wheat_prices.csv')
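Whether the values land in separate CSV columns depends on whether each row is one string or a list of fields: a CSV writer treats a string that already contains commas as a single field and wraps it in quotes. The sketch below uses Python's standard csv module instead of Pandas (Pandas' to_csv() applies the same minimal quoting by default), and the row string is a made-up placeholder.

```python
import csv
import io

row_text = "2021 7.00 5.50"  # hypothetical row.text value


def write_rows(rows):
    """Write rows with csv.writer and return the resulting CSV text."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(rows)
    return buffer.getvalue()


# One string that already contains commas -> a single quoted field.
as_one_field = write_rows([[row_text.replace(" ", ",")]])

# A list of fields -> one CSV column per value.
as_fields = write_rows([row_text.split()])

print(as_one_field)  # "2021,7.00,5.50"
print(as_fields)     # 2021,7.00,5.50
```

Opened in a spreadsheet, the first version shows up as a single column of text, while the second spreads the values across separate columns.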

Conclusion

This was my first time using Selenium, and I don't have any experience building scrapers, but I wanted to build one so I could use the data to fill a database and serve it through an API. If you have any suggestions on how to improve the scraper, please leave a comment.

Thank you for taking the time to read this article.

If you have any recommendations about other packages, architectures, how to improve my code, my English, or anything else, please leave a comment or contact me on Twitter or LinkedIn.

The source code is here.